There are many ethical considerations related to emerging technology, and the scale and application of AI bring unique and unprecedented challenges in areas such as privacy, bias and discrimination, economic power, and fairness. Below are some of the ethical concerns with AI.

Concerns over Data Privacy and Security

A frequently cited issue is privacy and data protection. Machine Learning (ML) needs large data sets for training, and access to those data sets raises questions about who may collect and use the data.

An additional problem arises with regard to AI and pattern detection. This capability may pose privacy risks even if the AI has no direct access to personal data. One example is a study by Jernigan and Mistree, which showed that sexual orientation can be predicted from Facebook friendships. The notion that individuals may unintentionally ‘leak’ clues to their sexuality in digital traces is a cause for worry, especially for those who do not want this information disclosed. Likewise, Machine Learning capabilities enable the potential re-identification of anonymized personal data.
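To make the re-identification risk concrete, here is a minimal Python sketch. It assumes a hypothetical "anonymized" data set in which names were removed but quasi-identifiers (ZIP code, birth year, sex) were kept; the field names, records, and helper functions are illustrative assumptions, not taken from any real data set or study. A record whose combination of quasi-identifiers is unique can be linked back to a person by anyone who knows those attributes from another source.

```python
from collections import Counter


def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    return keys, Counter(keys)


def smallest_group_size(records, quasi_identifiers):
    """Return k, the size of the smallest group (the 'k' in k-anonymity)."""
    _, counts = group_sizes(records, quasi_identifiers)
    return min(counts.values())


def unique_records(records, quasi_identifiers):
    """Return records whose quasi-identifier combination appears exactly once."""
    keys, counts = group_sizes(records, quasi_identifiers)
    return [r for r, k in zip(records, keys) if counts[k] == 1]


# Hypothetical data: no names, but quasi-identifiers remain.
records = [
    {"zip": "10001", "birth_year": 1985, "sex": "F", "diagnosis": "A"},
    {"zip": "10001", "birth_year": 1985, "sex": "F", "diagnosis": "B"},
    {"zip": "10002", "birth_year": 1990, "sex": "M", "diagnosis": "C"},
]

qi = ["zip", "birth_year", "sex"]
print(smallest_group_size(records, qi))  # 1: at least one person stands alone
print(unique_records(records, qi))       # the single record from ZIP 10002
```

A smallest group size of 1 means at least one record can be singled out, even though no name appears anywhere in the data.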

While most jurisdictions have established data protection laws, evolving AI can still create unforeseen data protection risks and, with them, new ethical concerns. The biggest risk lies in how some organizations collect and process vast amounts of user data in their AI-based systems without customer knowledge or consent, which can have wider social consequences.

Treating Data as a Commodity

Much of the current discourse around information privacy and AI does not take into account the growing power asymmetry between institutions that collect data and the individuals generating it. Data is a commodity, and for the most part, people who generate data don’t fully understand how to deal with this.

AI systems that understand and learn how to manipulate people’s preferences exacerbate the situation. Every time we search the internet, browse websites, or use mobile apps, we give away data either explicitly or unknowingly. Most of the time, we legally allow companies to collect and process that data when we agree to their terms and conditions. These companies can then collect user data and sell it to third parties. There have also been many instances where sensitive user data was exposed through data breaches, such as the 2017 Equifax breach, which exposed sensitive data of approximately 147 million people, including Social Security numbers and, for some of them, credit card numbers.

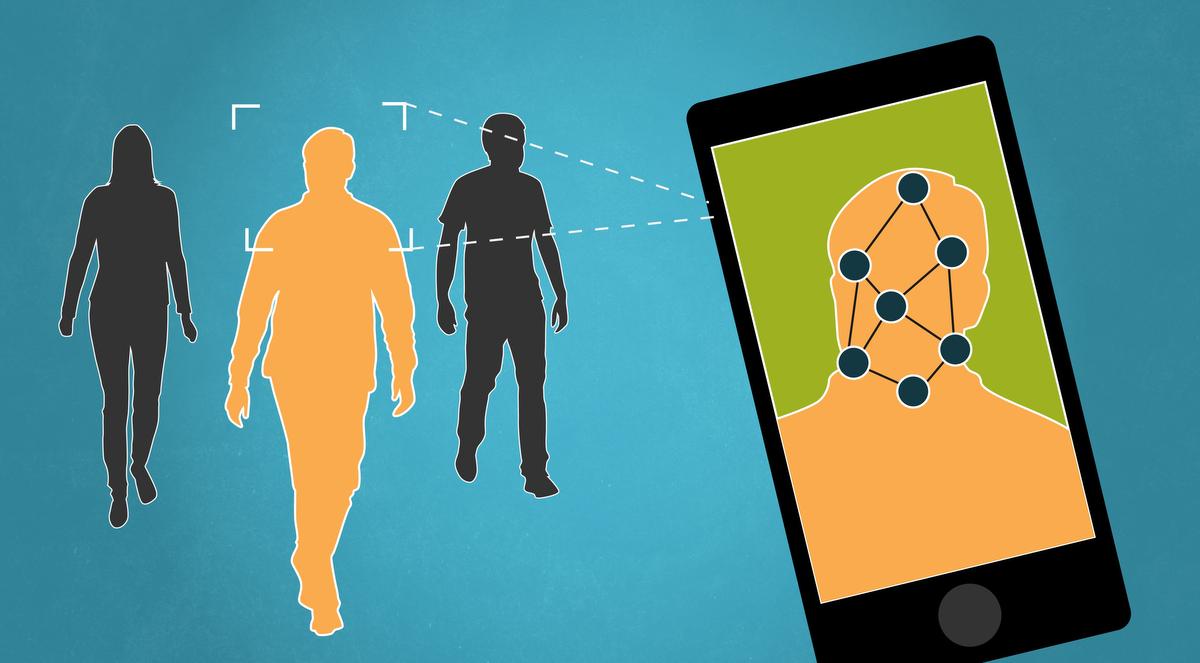

Ethical Concerns with AI over Bias and Discrimination

Technology is not neutral; it is as good or bad as the people who develop it. Much of human bias can be transferred to machines. One of the key challenges is that ML systems can, intentionally or inadvertently, reproduce existing biases.

One example of AI bias and discrimination is the resume-review program that a team of software engineers at Amazon began building in 2014. The team later realized that the system discriminated against women applying for technical roles.

There is also empirical evidence of AI bias in the form of demographic differentials. Research conducted by the National Institute of Standards and Technology (NIST) evaluated 189 facial-recognition algorithms from 99 developers, including Microsoft, Toshiba, and Intel, and found that contemporary face recognition algorithms exhibit demographic differentials of various magnitudes, with larger differentials in false positives than in false negatives. Another example is the 2019 vote in San Francisco to ban the use of facial recognition by city agencies, motivated in part by the concern that AI-enabled facial recognition software is prone to errors when used on women or people with dark skin.

Discrimination is illegal in many jurisdictions. Developers and engineers should follow inclusive design practices that consider diverse groups when building AI systems, and should monitor their algorithms for biased outcomes.
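One simple way to monitor for the kind of demographic differential described above is to compare error rates across groups. The Python sketch below computes the false positive rate per group from labeled predictions. The group labels, sample data, and function name are hypothetical and chosen only for illustration; this is not NIST's methodology, just a minimal version of the idea.

```python
from collections import defaultdict


def false_positive_rate_by_group(samples):
    """Compute the false positive rate for each group.

    samples: iterable of (group, actual, predicted) tuples, where
    actual and predicted are booleans (True = "match").
    Only actual negatives count toward the false positive rate.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, actual, predicted in samples:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


# Hypothetical evaluation results for two demographic groups.
samples = [
    ("group_a", False, True),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_b", False, True),
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", True, True),  # a true match; ignored for the false positive rate
]

rates = false_positive_rate_by_group(samples)
print(rates)  # {'group_a': 0.25, 'group_b': 0.5}
```

In this made-up data, the system wrongly flags members of the second group twice as often as members of the first, which is the sort of gap a monitoring process should surface.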

Ethical Concerns with AI over Unemployment and Wealth Inequality

The fear that AI will impact employment is not new. According to a 2017 McKinsey Global Institute report, as many as 800 million people could lose their jobs to automation by 2030. However, many AI experts argue that jobs may not disappear but change, and that AI will also create new jobs. Some also argue that if robots can take over a job, that job is too menial for humans anyway.

Another issue is wealth inequality. Most modern economic systems compensate workers for creating a product or offering a service. The company pays wages, taxes, and other expenses, and puts the remaining profits back into production, training, or new business to further increase profits. The economy continues to grow in this environment. Introducing AI disrupts this economic flow. Employers do not need to pay robots wages, nor the payroll taxes that come with them. Robots can work at full capacity for a low ongoing cost. CEOs and stakeholders can keep the company profits generated by the AI workforce, which leads to greater wealth inequality.

Concern over Concentration of Economic Power

The economic impacts of AI are not limited to employment. Another concern is the concentration of economic (and political) power. Most, if not all, current AI systems rely on large computing resources and massive amounts of data. The organizations that own or have access to such resources will benefit more than those that do not. Internationally, this economic power is concentrated in big tech companies. Zuboff’s concept of “surveillance capitalism” captures the fundamental shifts in the economy facilitated by AI.

This AI-enabled concentration of power raises the question of fairness when large companies exploit data collected from individuals without compensating them. Companies also use insights about users to shape individual behavior, reducing the average person’s ability to make autonomous choices. Such economic issues thus relate directly to broader questions of fairness and justice.

Ethical Concerns with AI in Legal Settings

Another debated ethical issue concerns the use of AI in legal settings. AI can amplify biases in predictive policing or criminal probation services. According to a paper published on SSRN, law enforcement agencies increasingly use predictive policing systems to predict criminal activity and allocate police resources. These systems are built on data produced during documented periods of biased, flawed, and sometimes unlawful practices and policies.

At the same time, the entire process is interconnected. Policing practices and policies shape how the data is created, raising the risk of skewed, inaccurate, or systematically biased data. If predictive policing systems are fed such data, they cannot break away from the legacy of the unlawful or biased policing practices they are built on. Moreover, claims by predictive policing vendors do not provide sufficient assurance that their systems adequately mitigate these data problems.
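The feedback loop described above can be illustrated with a toy simulation in Python. It assumes two hypothetical districts with the same true incident rate but a skewed historical record, and a simplified rule in which patrols are allocated in proportion to recorded incidents and incidents are only recorded where patrols are present. All numbers and names are illustrative assumptions; real predictive policing systems are far more complex.

```python
def simulate_patrol_feedback(recorded, true_rates, rounds=10, patrols=100):
    """Simulate patrol allocation driven by historical records.

    recorded:   initial recorded incident counts per district
    true_rates: true incidents observed per patrol, per district
    Returns each district's final share of all recorded incidents.
    """
    recorded = list(recorded)
    for _ in range(rounds):
        total = sum(recorded)
        # Patrols follow the historical record, not the true rates.
        shares = [r / total for r in recorded]
        for i, rate in enumerate(true_rates):
            # Incidents are only recorded where patrols are sent.
            recorded[i] += patrols * shares[i] * rate
    total = sum(recorded)
    return [r / total for r in recorded]


# Both districts have the same true rate, but the history is skewed 60/40.
final_shares = simulate_patrol_feedback(recorded=[60, 40], true_rates=[0.5, 0.5])
print([round(s, 2) for s in final_shares])  # [0.6, 0.4]
```

Even though both districts are identical in reality, the 60/40 skew in the historical record never corrects itself under this rule, because the system only gathers new data where the old data sends it.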

Concerns with the Digital Divide

AI can exacerbate another well-established ethical concern, namely the digital divide. Divides between countries, genders, age groups, and rural and urban settings, among others, already exist, and AI can deepen them. AI is also likely to affect access to other services, potentially excluding segments of the population even further. Lack of access to the underlying technology can lead to missed opportunities.

In conclusion, the ethical issues that come with AI are complex. The key is to keep these issues in mind when developing and implementing AI systems. Only then can we analyze the broader societal issues at play. There are many different angles and frameworks for debating whether AI is good or bad, and no single theory is the best. Nevertheless, as a society, we need to keep learning and stay well-informed in order to make good decisions in the future.

For more information on AI ethics, you can also check our previous blog: Ethics of Artificial Intelligence.

The Fuse.ai center is an AI research and training center that offers blended AI courses through its proprietary Fuse.ai platform. The proprietary curriculum includes courses in Machine Learning, Deep Learning, Natural Language Processing, and Computer Vision. Certifications like these will help engineers become leading AI industry experts. They also aid in achieving a fulfilling and ever-growing career in the field.